Google I/O 2022 promises a better life through tech

After a long Covid break, the Google I/O conference finally took place in front of a live audience. The event opened with a powerful statement from CEO Sundar Pichai that echoed throughout: “Technology has the power to make everyone’s lives better.” Those words also resonate with the message of Emakina’s book, Visions of a better world. With the internet flooded with Android 13 news, we decided to highlight the technological advancements that intrigued us most.

Advanced translation, Street View, TL;DR and Project Starline

Technology is increasingly being used to help during trying times. In the war in Ukraine, real-time translation is helping refugees communicate with the people hosting them in their homes around the world. Google’s Zero-Resource machine translation approach enables translation for languages with no in-language parallel text and no language-specific translation examples.

The conference also highlighted the impressive usage results of the eco-friendly routing suggestions in Google Maps. The feature is set to roll out across Europe soon.

Street View comes with improvements, too. Combining machine learning and 3D with fused aerial and street-level imagery now enables the immersive view. This view shows how busy an attraction is, the traffic around it and how the weather changes throughout the day.

Google’s TL;DR (too long, didn’t read) feature, which summarises long documents, will now be a permanent part of Google Docs. Google Chat will get a similar feature that collects the main points of a conversation as bullet points.

One project that our DXD and Insights team took particular interest in during Google I/O 2021 is Project Starline. Project Starline is a communication technology, in development by Google, that lets users see a 3D model of the person they’re communicating with. Since its inception it has shown major improvements, and Google has since started deploying it with enterprise partners.

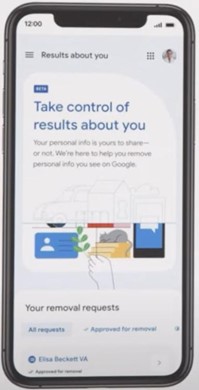

Google Search and skin tone recognition updates

Google Search is becoming more user friendly than ever, allowing users to decide what information they do or do not want displayed. This comes in particularly handy when unwanted information, such as a home address or mobile phone number, shows up in search results. As pointed out during the conference, it’s important to know that although Google can do its part to get this information removed from Google Search, the information will still be on the web – just not as easy to find.

Google Lens, which was launched in 2017, got a multisearch feature update. Google Lens is an image recognition tool that delivers relevant information about the objects it identifies using visual analysis. Multisearch allows people to search with both images and text at the same time. For example, if the first result isn’t satisfactory, the user can add text describing exactly what they’re looking for in order to get better search results.

For years, Google has been working on improving skin tone representation across Google products. In partnership with Harvard professor and sociologist Dr. Ellis Monk, Google is releasing a new skin tone scale, the Monk Skin Tone (MST) Scale, designed to be more inclusive of the spectrum of skin tones we see in our society. The MST Scale will help build more representative datasets, so that AI models can be trained and evaluated for fairness, resulting in features and products that work better for everyone, of all skin tones. For example, the scale is used to evaluate and improve the models that detect faces in images.

A more “human” Google Assistant and LaMDA 2

Simple Google Assistant updates this year are proving to be of great significance to the user experience. Users can now start talking to the Assistant without saying “Hey Google”: the device waits for the user to establish eye contact and then starts listening for commands automatically. A selection of tasks, such as asking for the lights to be turned off, doesn’t even require eye contact.

LaMDA 2, the follow-up to LaMDA, is an AI system built for “dialogue applications” that can understand millions of topics and generate “natural conversations” that never take the same path twice. Alongside LaMDA 2, Google unveiled AI Test Kitchen, an interactive hub for AI demos where users can interact with the models in curious ways, like exploring a particular topic and drilling down into its subtopics. Users can mark offensive responses as they come across them. To give you an idea of the scale of Google’s language models: GPT-3 has 175 billion parameters, whereas PaLM, another Google model showcased at the conference, has 540 billion.

A final thought

Google I/O 2022 once again proved to be very rich in new features and improvements. But the conference isn’t just about announcing brand new projects and technologies – it’s also about summarising what Google has been working on over the past few months. For all the keynotes and announcements, we encourage you to have a look at the full programme. We can’t wait to see what happens in the coming months as we start to adapt and integrate these technological advancements into our work.
